Using Databricks SQL in VSCode

Recently, I had the opportunity to explore the Databricks SQL extension for VSCode, and I was thoroughly impressed.

In December 2022, Databricks launched the Databricks Driver for SQLTools extension, and although it is still in preview, the features are already good and useful.

For data analysts, report developers and data engineers, having the ability to execute SQL queries against Databricks workspace objects is crucial for streamlining workflows and making data analysis activities much more efficient and quicker. The Databricks SQL extension for VSCode provides just that, with a simple and intuitive interface, this extension makes it easy to connect to Databricks workspace and run SQL queries directly from VSCode.

Getting Started

First you need to install the Databricks Driver for SQLTools extension, which is available in the Visual Studio Marketplace. Then set up the Databricks connection to your workspace using the Databricks Host, Path, and PAT Token. The following blog by Ganesh Chandrasekaran will give you the required steps to get started.

Once you have the extension and connected to Databricks workspace, you can start querying your Databricks workspace using SQL directly from VSCode.

Features

Some of the features, I liked…

Viewing Objects

You have the ability to view all Databases, Tables, Views and Fields that are in the Databricks workspace, under the CONNECTIONS panel (left-hand). This is similar to SQL Management Studio or Azure Data Studio, making it easy to use for those that are familiar with SQL databases.

Viewing Multiple Databases

All the Databases in the Databricks workspace is shown, therefore you can query and view multiple databases in one application. The SQL Editor in Databricks SQL workspace, only allows you to view a single database at a time, therefore when working with multiple databases you must switch between them.

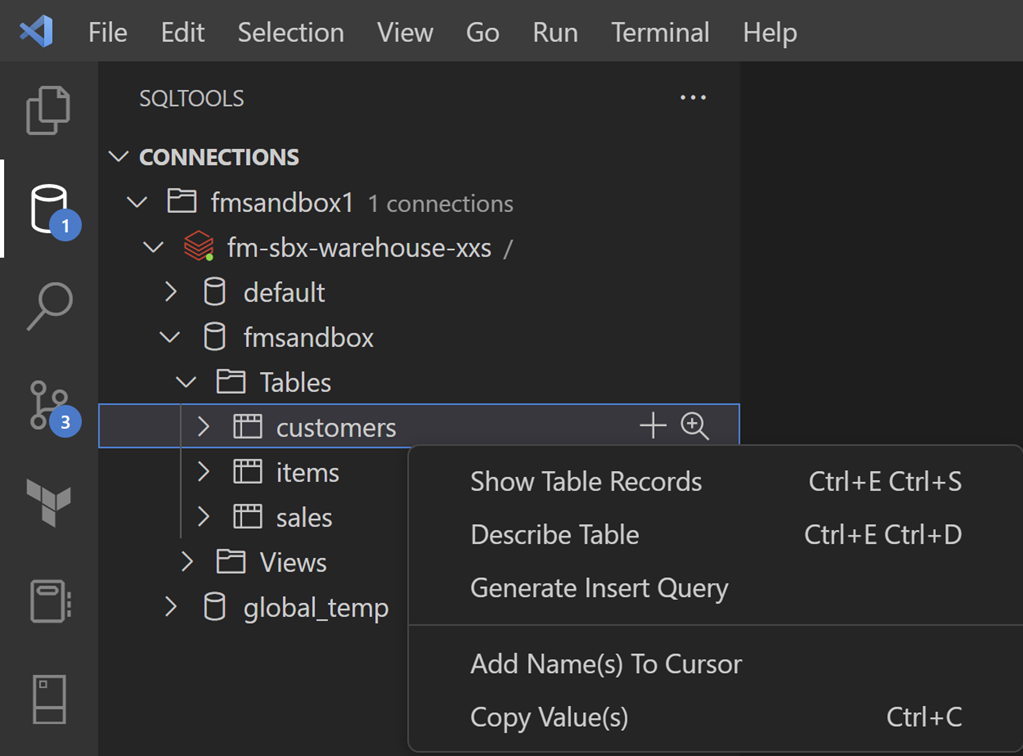

Generate Options

You can generate table records, metadata and insert statements, without having to write any code using the right-click option.

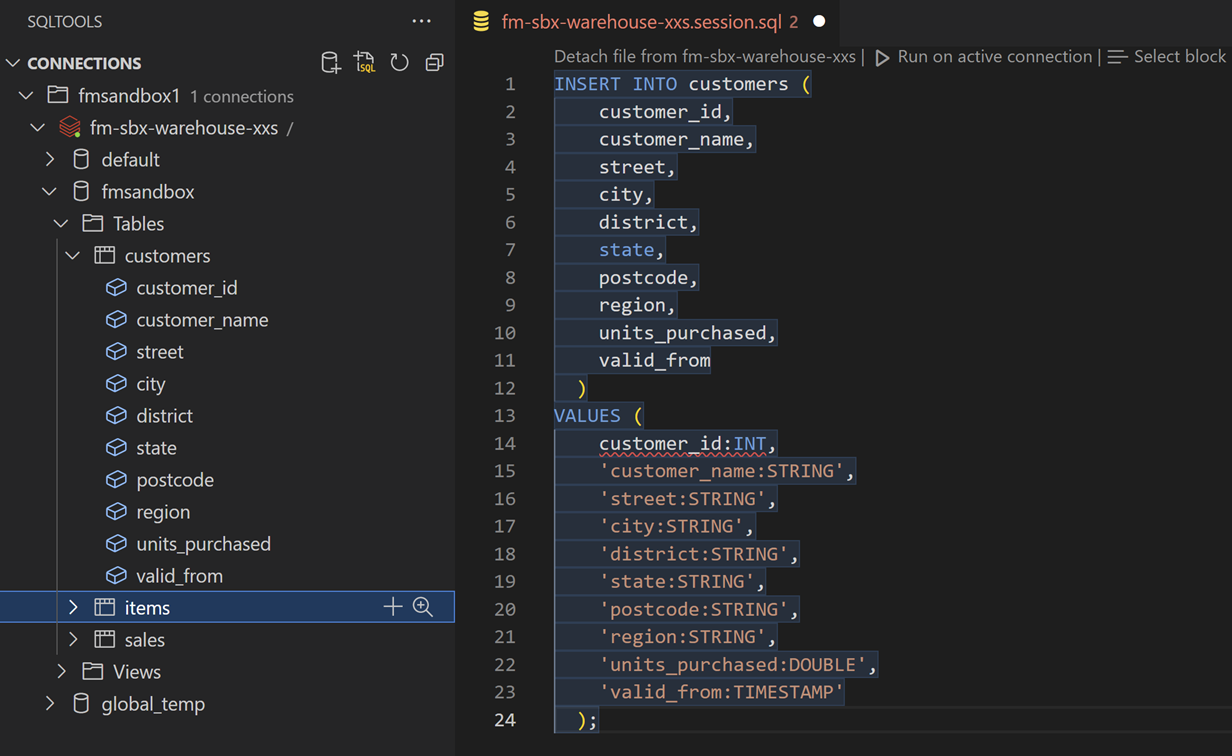

By right clicking the table you can select Show Records, Describe Table, Generate Insert Query or using the Plus and Magnifying Glass icons.

The Show Records option - selects 50 records from the table.

The Describe Table option - show the table metadata information, including the column name and type.

The Generate Insert Query option - generates an insert statement for adding data into tables quickly.

And finally, the Add Name(s) to Cursor option - allows you to add the table or fields names to the script.

Query Results

When you run a SQL query, the results are displayed in a new tab. Each time a query is executed a new tab is opened, meaning the previous execution results is still available which can be beneficial when debugging queries.

If you’re like me and prefer the result being displayed at the bottom, then you can drag the result tab to the bottom or use the Split Editor option.

IntelliSense

It supports intellisense, so real-time suggestions and prompts are given which makes writing the queries much quicker and easier. This helps eliminate syntax errors, saving time and effort in writing queries and enhances overall productivity.

Summary

Overall, running Databricks SQL queries in VSCode allows you to streamline data analysis processes and make it more efficient.

With its user-friendly interface, robust features, and seamless integration with Databricks, this extension is the ideal solution for querying your Databricks Lakehouse outside of the Databricks ecosystem.

The extension is a great choice for data analysts and report developers, as it gives them access to the Databricks workspace without the need for direct access, which opens up new opportunities for collaboration and faster insights.

The similarity to other SQL query editors makes this extension a great choice for those who are already familiar with SQL databases, this familiar interface makes it easy to get started and saves valuable time when working with Databricks Lakehouse.

The Databricks Driver for SQLTools extension is a good tool for anyone working with data in Databricks Lakehouse. I’m sure there will be more exciting features to come in the future, so it's definitely worth keeping an eye on it.